T Ashok @ash_thiru on Twitter

Summary

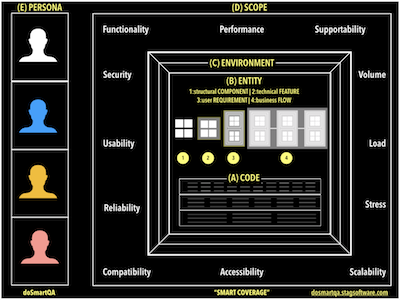

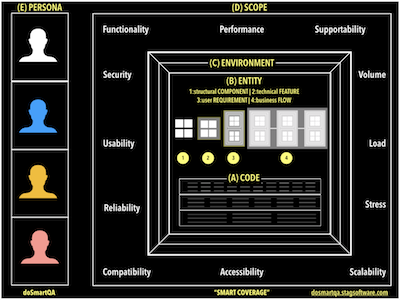

Coverage, an indicator of test effectiveness, is really multidimensional, yet it has rarely been dealt with rigorously. This article outlines a “Smart coverage framework” that looks at coverage from multiple angles and summarises it as one beautiful picture.

We are always challenged on the sufficiency of test cases. The clichéd notion of code coverage is sadly insufficient, being a unidimensional measure: necessary, it is, but definitely not sufficient. Other notions of coverage, like the requirements traceability matrix (RTM) and test coverage, have not been given the rigour needed to make objective assessments.

With rapid assessment as the objective, here is a simple yet complete smart coverage framework that takes a full 360-degree view.

(A) Code coverage

At the structural level is CODE coverage, which assesses whether all the code present has indeed been executed. It ensures that every code aspect (lines, conditions, paths, functions, classes, files) has been executed/validated at least once. It is important to understand that this is achieved by executing functional test cases, so it is a measure of the completeness of those functional test cases, more often at the earlier levels of testing. Note that missing code, i.e. unimplemented functionality, cannot be detected this way.
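To make the limitation concrete, here is a minimal Python sketch that records executed lines with the standard `sys.settrace` hook. The function `apply_discount` and the helper `lines_executed` are hypothetical, chosen only for illustration. A single member test executes every line of `apply_discount` (100% line coverage), yet the non-member outcome of the condition is never exercised, which is what condition/branch coverage would catch; and neither measure would notice a requirement that was never coded at all.

```python
import sys


def apply_discount(price, is_member):
    # Hypothetical function under test: members get 10% off.
    if is_member:
        price = price * 0.9
    return price


def lines_executed(func, *args):
    """Return the set of line numbers inside `func` that ran during one call."""
    executed = set()
    target = func.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not target:
            return None              # do not trace any other function
        if event == "line":
            executed.add(frame.f_lineno)
        return tracer                # keep receiving line events for this frame

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)
    return executed


member_run = lines_executed(apply_discount, 100, True)
guest_run = lines_executed(apply_discount, 100, False)

# The member test alone reaches every line the two tests reach together...
print("member test hits all lines:", member_run == member_run | guest_run)
# ...yet it never takes the False outcome of `if is_member`.
print("guest run is a strict subset:", guest_run < member_run)
```

In practice a coverage tool such as coverage.py, with branch measurement enabled, does this bookkeeping; the sketch only shows the idea.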

(B) Entity coverage

At the behavioural level is ENTITY coverage, which assesses whether all entities and all types of entities (from the lower-level COMPONENT to the higher-level FLOW) have been validated, i.e. have test cases for every type of entity. A system is a composition of various types of entities that have been well integrated. It is only logical to ensure that each entity, be it a lower-level entity like a component or feature, or a higher-level entity like a requirement or flow, has indeed been validated. This ensures that we have looked from the viewpoints of both ‘in-the-small’ and ‘in-the-large’ and that all of them have been validated.
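One way to keep an entity view is a simple traceability map from test cases to entities. The Python sketch below assumes a hypothetical inventory (`ENTITIES`) tagged by level and a hypothetical mapping (`TESTS`) from each test case to the entities it validates; `entity_coverage` is likewise an illustrative helper. It reports coverage per level and lists the entities that have no test at all.

```python
from collections import defaultdict

# Hypothetical entity inventory: entity id -> level, from in-the-small to in-the-large.
ENTITIES = {
    "login-form": "component",
    "password-hasher": "component",
    "sign-in": "feature",
    "password-reset": "feature",
    "REQ-101": "requirement",
    "onboarding-flow": "flow",
}

# Hypothetical traceability: test case id -> entities it validates.
TESTS = {
    "TC-01": {"login-form", "sign-in"},
    "TC-02": {"password-hasher"},
    "TC-03": {"sign-in", "onboarding-flow"},
}


def entity_coverage(entities, tests):
    """Return (coverage ratio per level, sorted list of untested entities)."""
    covered = set().union(*tests.values())
    counts = defaultdict(lambda: [0, 0])      # level -> [covered, total]
    for entity, level in entities.items():
        counts[level][1] += 1
        if entity in covered:
            counts[level][0] += 1
    ratios = {level: hit / total for level, (hit, total) in counts.items()}
    gaps = sorted(e for e in entities if e not in covered)
    return ratios, gaps


ratios, gaps = entity_coverage(ENTITIES, TESTS)
for level, ratio in ratios.items():
    print(f"{level:<12} {ratio:.0%}")
print("untested:", gaps)
```

Here every component is tested, yet the feature and requirement levels still show gaps (`password-reset`, `REQ-101`), a picture that code coverage alone would not reveal.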

(C) Environment coverage

Have we planned to validate on all the ENVIRONMENTs that matter? An environment is really a combination of various elements like OS, browser, database and perhaps hardware devices. Environment coverage ensures that we have validated on all the environments that matter.
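Treating an environment as a tuple of elements makes the “all environments that matter” question countable. In this Python sketch the element lists, the `matters` filter and the `validated` set are all hypothetical; the full cross product is built with `itertools.product`, pruned to the combinations that matter, and compared with where the tests were actually run.

```python
from itertools import product

# Hypothetical environment elements; replace with the ones that matter to you.
OPERATING_SYSTEMS = ["Windows", "macOS"]
BROWSERS = ["Chrome", "Firefox", "Safari"]
DATABASES = ["PostgreSQL", "MySQL"]


def matters(os_name, browser, database):
    # Hypothetical rule: Safari is only considered on macOS.
    return not (browser == "Safari" and os_name != "macOS")


required = {
    env for env in product(OPERATING_SYSTEMS, BROWSERS, DATABASES) if matters(*env)
}

# Hypothetical record of environments where the tests were actually run.
validated = {
    ("Windows", "Chrome", "PostgreSQL"),
    ("macOS", "Safari", "MySQL"),
    ("macOS", "Chrome", "PostgreSQL"),
}

covered = required & validated
print(f"environment coverage: {len(covered)}/{len(required)}")
for env in sorted(required - validated):
    print("not yet validated:", " / ".join(env))
```

The cross product grows multiplicatively with every element added, which is why deciding what “matters” is the real work in environment coverage.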

(D) Test coverage

Do we have test cases spanning different types of tests so that the full SCOPE expected can be evaluated? The scope is really a combination of functional correctness (functionality) and non-functional aspects (attributes). This demands that we perform a variety of tests, and test coverage is precisely about ensuring that we have executed all the types of tests that indeed matter.

(E) Persona coverage

Finally, do we have scenarios from a PERSONA view so that we can indeed ascertain correctness from the user's POV? Having validated the product from the angles of code, environment, entity and test types, the final step is to look at it from the end consumer's perspective. Persona coverage ensures that we have validated the system from the POV of various personas (actors, really, as not all end users may be human).

Coverage is not an assessment to be used only after design; treat it as one that drives rapid, effective design right from the start, so that we can continually steer tests to be effective and efficient.
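Because coverage is meant to steer design from the start, it can help to record each dimension as planned versus validated and look at all five side by side. The Python sketch below is one hypothetical way to do that: the `Dimension` class, the `picture` renderer and every item in the sets are illustrative assumptions, not something the framework prescribes. Run early, when little has been validated, the gaps column points at where the next tests should go.

```python
from dataclasses import dataclass, field


@dataclass
class Dimension:
    """One coverage dimension: what we planned to validate vs what we did."""
    name: str
    planned: set
    validated: set = field(default_factory=set)

    def ratio(self):
        if not self.planned:
            return 1.0                       # nothing planned, nothing missing
        return len(self.planned & self.validated) / len(self.planned)

    def gaps(self):
        return sorted(self.planned - self.validated)


def picture(dimensions, width=20):
    """Render one text row per dimension: a bar, a percentage and the gaps."""
    rows = []
    for d in dimensions:
        r = d.ratio()
        bar = "#" * round(r * width)
        gaps = ", ".join(d.gaps()) or "-"
        rows.append(f"{d.name:<12} {bar:<{width}} {r:>4.0%}  gaps: {gaps}")
    return "\n".join(rows)


# Hypothetical plan and progress for each of the five dimensions (A)-(E).
dimensions = [
    Dimension("Code", {"checkout.py", "cart.py", "tax.py"},
              {"checkout.py", "cart.py", "tax.py"}),
    Dimension("Entity", {"cart", "payment", "checkout-flow"}, {"cart", "payment"}),
    Dimension("Environment", {"Chrome/Windows", "Safari/macOS"}, {"Chrome/Windows"}),
    Dimension("Test type", {"functional", "load", "security"}, {"functional"}),
    Dimension("Persona", {"first-time buyer", "admin", "payment gateway"},
              {"first-time buyer"}),
]

print(picture(dimensions))
```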

In the current context, where terms like unit testing and integration testing have lost their meaning, you have explained the levels and types of testing very clearly. The challenge is where, or in what order, environment coverage fits. When do you squeeze it in? I thought automated positive-flow persona tests might be a good choice. My reasoning is that environment coverage costs a lot of effort and money, so there is no point in testing error recovery or negative scenarios on all possible environments unless field defect analysis proves our strategy wrong. Your thoughts, please?

Dear Nagaraj, thank you, glad you liked it. Yes, I agree that environment tests are expensive and benefit from automation. Certainly, positive scenarios need to be tested on various environments. As for negative scenarios, in addition to your suggestion of basing them on field defect analysis, it is important to consider the type of potential issue (test type) and choose the negative scenarios accordingly. Note that scenarios are not limited to functionality alone; how a certain environment handles security, load, etc. may differ a little, and therefore some negative scenarios may prove interesting.